I am a final-year PhD candidate in Computer Science at the University of Virginia, advised by Prof. Seongkook Heo. Before that, I obtained an M.S. in Statistics from the University of Virginia and a B.A. in Sociology from Shanghai University.

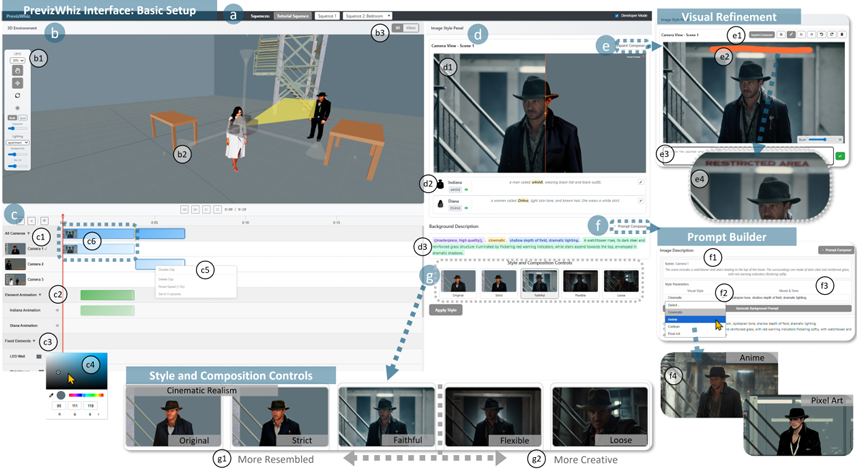

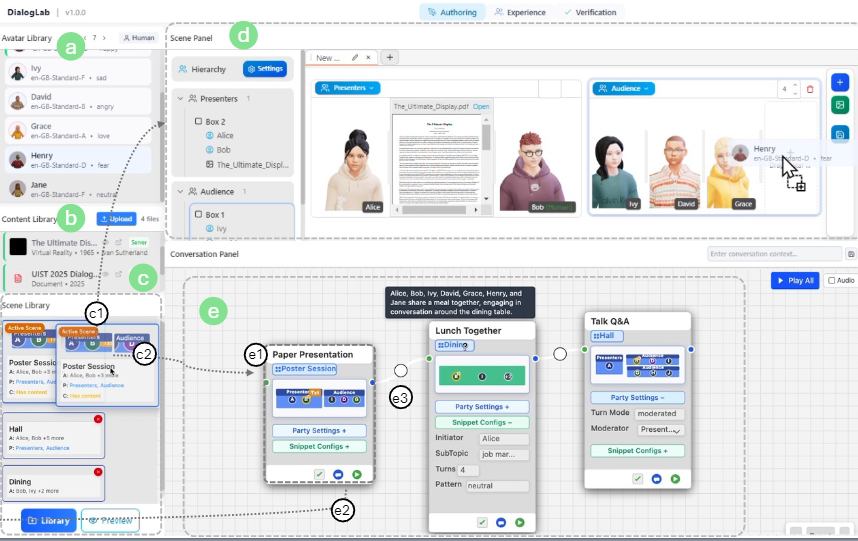

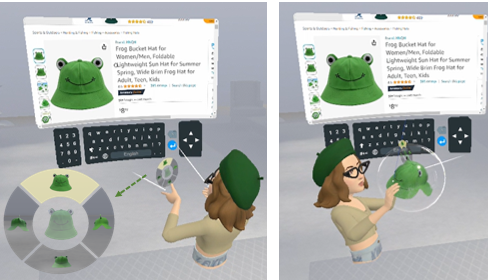

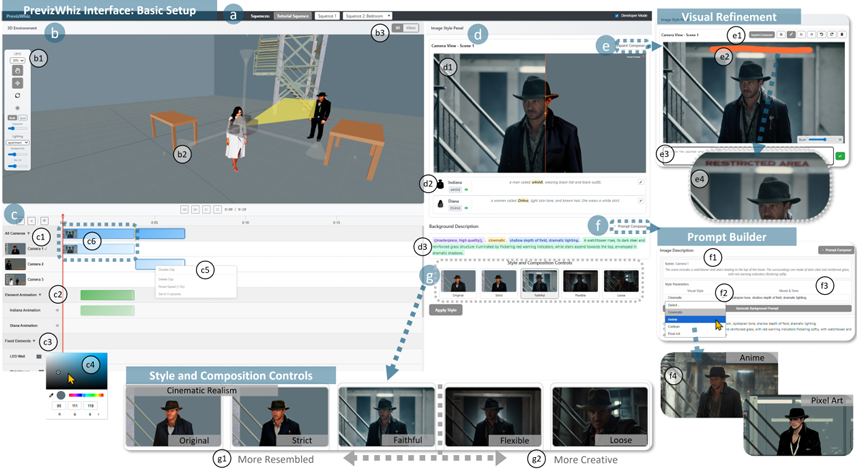

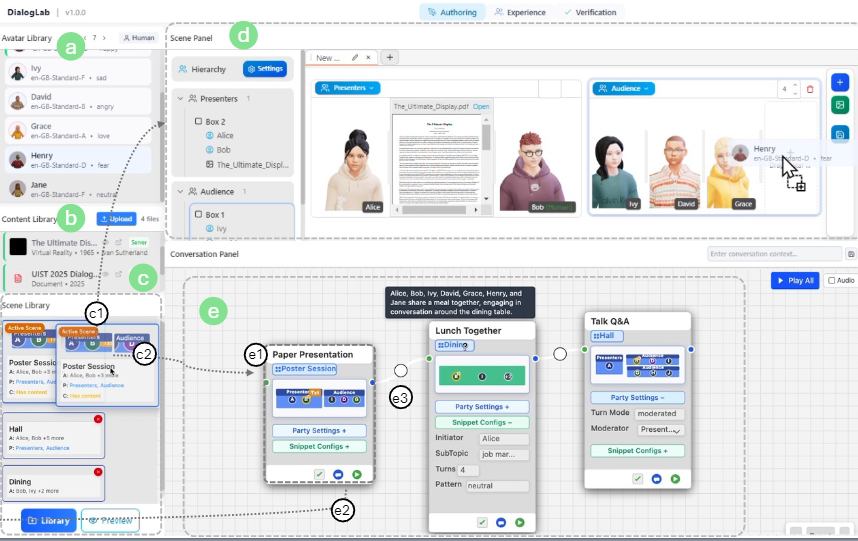

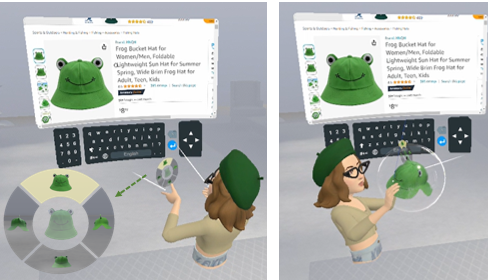

I develop and study interactive systems that engineer both the scale and nuance of communication, bridging behavioral theories of human interaction with AI-driven authoring and generative tools. These systems span conversational interfaces, avatar-based simulations, and multimodal media.

My work has been recognized and supported by a Google PhD Fellowship and selection as an MIT EECS Rising Star.

During the summers, I've interned at Microsoft Research, Google, and Autodesk Research.

Design and source code from Link